In Davos this week (World Economic Forum, January 2026), Nvidia CEO Jensen Huang made a point that sounds simple but lands heavy: AI isn’t slowing down; it’s running into a different limit, namely how fast we can build the physical world underneath it.

When people hear “trillions of dollars,” they picture one thing: chips. But Huang’s message was broader. He was talking about the full system: data centers, electricity, networking gear, cooling, software, and the people who keep it all running. It’s like buying a jet engine and realizing you still need the airplane.

The big idea is pretty grounded: AI growth isn’t just about smarter models. It’s constrained by power grids, permits, server supply chains, and the real estate needed to house it all. If those move slowly, AI rolls out slowly, no matter how good the demos look.

What “trillions for AI infrastructure” really means (it is way more than GPUs)

Huang’s “trillions” line hits because it reframes the debate. A lot of the public talk treats AI as software magic that scales like an app store download. In reality, modern AI scales more like heavy industry. It needs factories, utilities, and long lead times.

He also pushed back on the “AI bubble” framing. His logic is pretty practical: the investments look huge because the foundation is huge. A few hundred billion dollars can disappear fast when you’re building data centers, upgrading grids, and buying fleets of servers at once.

You’ll hear eye-popping numbers thrown around too. One estimate Huang has referenced in recent remarks is that the total ecosystem opportunity could reach around $85 trillion over 15 years. Don’t get hung up on the exact figure. The more useful takeaway is what it implies: this is not a one-year capex cycle; it’s a long build.
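To put that estimate in yearly terms, here is a quick back-of-envelope division. It assumes, purely for illustration, that the spending is spread evenly across all 15 years, which real investment cycles almost never are:

```latex
\frac{\$85\ \text{trillion}}{15\ \text{years}} \approx \$5.7\ \text{trillion per year}
```

The even split is a simplification. What matters is the order of magnitude per year, not the exact shape of the curve.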

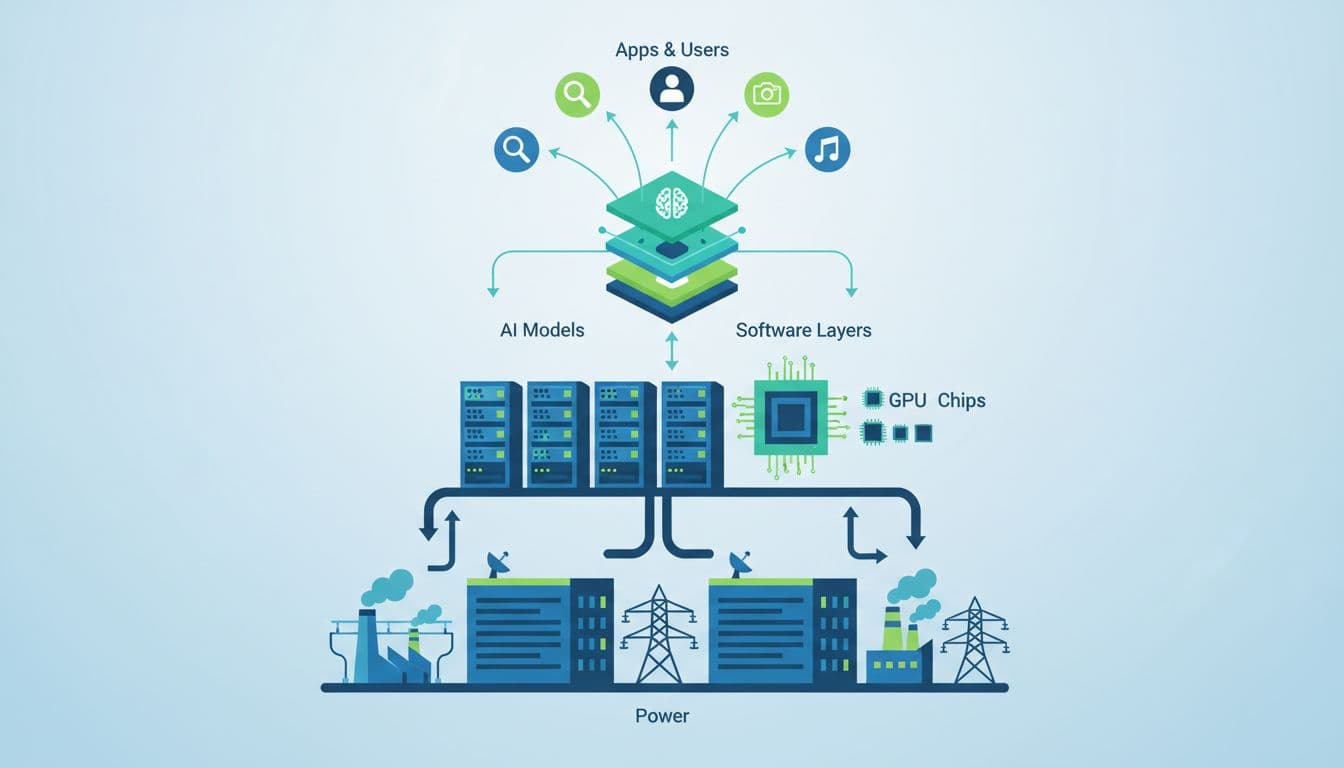

Here’s a simple way to picture the “stack” he’s pointing at:

| AI layer (bottom to top) | What it includes | Why it costs so much |
|---|---|---|
| Energy | Power generation, grid upgrades | Long projects, permits, materials |
| Compute hardware | GPUs, CPUs, memory | High demand, specialized manufacturing |
| Cloud systems | Servers, storage, orchestration | Massive scale, constant refresh |
| Models | Training, tuning, safety work | Compute-heavy, ongoing iteration |
| Apps | Products, workflows, integrations | Adoption takes time, needs reliability |

If you want more context on how Nvidia frames AI infrastructure as a multi-trillion-dollar industry, the company lays out its own perspective in Nvidia’s AI infrastructure “trillions of dollars” vision.

The parts people forget: buildings, power, cooling, and the “plumbing”

A data center is basically an AI factory, but it’s also a building with boring needs. Land. Concrete. Transformers. Water (sometimes). Fire suppression. Security. And lots of paperwork.

The best everyday comparison I’ve heard is a restaurant. A fancy stove doesn’t make a restaurant. You still need the kitchen, gas line, vents, staff, fridges, and a building that passes inspection. AI compute is the fancy stove. The rest is the stuff everyone underestimates until the bill shows up.

Cooling is another sleeper cost. AI servers run hot, and heat is the enemy of uptime. Keeping thousands of high-power chips stable requires industrial-grade cooling designs, redundancy, and constant maintenance. Add backup power systems, and you’re now talking about diesel generators, batteries, and grid interconnection work that can take years.

An AI-scale data center complex with heavy cooling and power infrastructure, created with AI.

Servers, networking, and software that make the chips useful

A GPU on its own is like an engine on a pallet. It doesn’t do anything until it’s in a working system.

To make modern AI training and inference run, companies need dense servers, racks, high-speed interconnects, fast storage, and software that can coordinate it all without falling apart. Networking matters a lot here because AI workloads often split across many GPUs at once. If the network is slow or unreliable, your expensive chips sit idle. That’s money burning in real time.
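To make the “money burning” point concrete, here is a rough sketch of how idle time turns into cost. Everything in it is hypothetical: the function name `idle_cost` is made up for illustration, and the cluster size, hourly price, and utilization are placeholder numbers, not real market rates or measured figures.

```python
def idle_cost(num_gpus: int, cost_per_gpu_hour: float, hours: float, utilization: float) -> float:
    """Return the dollars spent on GPU time that did no useful work.

    utilization is the fraction of time the GPUs are actually busy (0.0 to 1.0).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # Total bill for the period, whether or not the GPUs were working.
    total_cost = num_gpus * cost_per_gpu_hour * hours
    # The idle share of that bill is the part that bought nothing.
    return total_cost * (1.0 - utilization)


# Hypothetical cluster: 1,000 GPUs at an assumed $2 per GPU-hour for one day,
# busy only 70% of the time because they keep waiting on a slow network.
wasted = idle_cost(num_gpus=1000, cost_per_gpu_hour=2.0, hours=24, utilization=0.7)
print(f"${wasted:,.0f} of GPU time idle per day")  # prints "$14,400 of GPU time idle per day"
```

The formula is deliberately simple: it ignores power, cooling, and the knock-on cost of slower results. It only shows why utilization, which the network directly affects, sits at the center of the bill.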

Then there’s the software layer: the model training stacks, data pipelines, monitoring, security, and tools that keep performance stable. This is why Huang keeps coming back to “systems,” not just chips. The cost is in the full machine.

Why the spending is accelerating now, even after huge investments

So why does the spending keep climbing even after massive purchases in 2024 and 2025?

Because AI is moving from experiments to normal work. Once a team relies on AI daily, “good enough” starts to feel expensive. They want faster responses, lower costs per task, better reliability, and more privacy. Each of those pushes demand for more compute.

Huang’s view is also pretty intuitive: as AI gets cheaper and faster to run, people use it more. That extra usage then forces more buildout. It’s the same pattern we saw with the internet: more bandwidth enabled more video, which in turn created more demand for bandwidth.
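The “cheaper means more usage” logic fits in one line of arithmetic. As a simplified illustration, not a forecast, let N be the number of AI tasks and C the cost per task, so total spend is S = N · C. Now suppose the cost per task falls by a factor k while usage grows by a factor m:

```latex
S_{\text{old}} = N \cdot C, \qquad
S_{\text{new}} = (mN) \cdot \frac{C}{k} = \frac{m}{k}\, S_{\text{old}}
```

Total spending rises whenever m > k. For example, if a task gets 10× cheaper (k = 10) but usage grows 20× (m = 20), total spend doubles. Those numbers are made up; the point is that falling unit costs and rising total spend are not a contradiction.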

If you track the Davos agenda, the pace of change is obvious. The World Economic Forum’s live updates show how central AI and infrastructure were to the week’s conversations, treated as a core theme rather than a side topic: highlights from Davos 2026 day 3.

Real-world AI use is spreading, so compute demand keeps climbing

Look at where AI is landing right now:

Health care uses it to read images faster, sort cases, and help clinicians catch details. Finance uses it for document work, risk checks, fraud signals, and customer support. Manufacturers use it for vision systems, quality control, and predictive maintenance. Robotics is creeping forward too, with AI improving how machines see and plan.

Huang also highlighted robotics as a major opportunity, especially in Europe. CNBC covered his view on the scale of that opening: Huang on Europe’s robotics opportunity.

The common thread is simple: real deployments are steady, not flashy. They don’t always make for viral demos. But they do rack up compute bills, because they run all day.

AI-powered industrial automation in a real factory setting, created with AI.

The “AI factory” idea: spending money to produce intelligence at scale

Huang’s “AI factory” framing is useful because it makes the economics less mysterious.

A traditional factory turns raw materials into physical goods. An AI factory turns electricity and compute into outputs like predictions, summaries, code suggestions, design drafts, automated workflows, and agents that do routine tasks. The output isn’t a car door, it’s “work” in digital form.

That’s why companies keep buying infrastructure. If they believe AI factories will generate real productivity, they treat compute as capacity. More capacity means more output, which means more revenue or lower costs, at least in theory.

Some coverage gets even more concrete about what “trillions” could mean by decade’s end. For example, CoStar reported Nvidia’s expectation that global AI infrastructure spending could approach multi-trillion levels: AI infrastructure spending approaching $4 trillion. You don’t have to accept every projection to see the pattern: the buildout is being planned like a long industrial cycle, not a short tech fad.

Jobs, the AI “bubble” debate, and what regular workers should take from it

The jobs conversation at Davos had some honest friction in it, and that’s healthy. Huang tends to describe AI as a tool that raises what people can do. Other leaders, including in finance, have pointed out that some roles are being substituted right now.

Both can be true at the same time. Tech waves usually arrive like that. They upgrade some jobs, compress others, and create new ones that didn’t exist five years earlier.

In the Yahoo Finance discussion around Davos, one detail stood out: the old fear that radiologists would be “gone in 10 years” hasn’t played out that way. Instead, radiology teams often use AI to assist with detection and workflow, which can improve results and speed. At the same time, leaders like Larry Fink have warned about substitution in certain analyst-heavy roles in finance and law. Nobody can promise a clean outcome. It’s going to be uneven.

AI as a tool, not a monster, plus why some layoffs still happen

Thinking of AI as a tool changes the mood. Tools don’t “want” anything. They amplify the person holding them.

But tools also shift the value of tasks. If AI can draft a first-pass memo in 30 seconds, the entry-level work that used to train juniors can shrink. That’s where layoffs or role changes can show up, not because a whole profession disappears overnight, but because the mix of tasks inside jobs changes.

Health care is a decent example of the “tool” story. AI can flag anomalies, prioritize scans, and reduce missed findings. That tends to support the clinician rather than replace them. In contrast, document review and basic analysis in finance or law can be easier to automate, so headcount pressure is real.

Everyday office work where AI supports decisions and drafts, created with AI.

A simple playbook for staying valuable as AI spreads

The safest mindset I’ve found is a little plain: treat AI like a strong assistant that sometimes gets confused.

Learn one or two tools well enough that you can get reliable output, then build the habit of checking it. Not in a paranoid way, just in a normal “I’m accountable for this” way. People who can verify, edit, and apply AI output to real situations tend to move faster, and they make fewer messy mistakes.

Also, don’t ignore the human parts of work. Clear writing, good judgment, calm communication, knowing your customer, knowing your craft. AI can help, but it can’t replace trust. That’s why the line from the Davos chatter sticks: AI might not take your job, but someone who uses AI well could.

What I learned from Huang’s “trillions” message (my personal take)

I used to talk about AI like it was mostly software. Then I tried to run larger tasks myself and, honestly, I hit the wall fast.

A few months ago, I tested a heavier model workflow on a decent machine, thinking I’d just wait a bit longer. Instead, I ran into the usual trio: not enough memory, slow performance, and that creeping feeling of “maybe I should just rent cloud compute.” When I did, the cost stacked up quickly. Nothing outrageous, but enough to make me pause and re-check what I was doing.

That experience made Huang’s point feel less like CEO hype and more like physics. AI isn’t free. It’s electricity, hardware, cooling, and time. When you scale from one person experimenting to thousands of companies running AI all day, the bottleneck stops being clever prompts. The bottleneck is power, space, supply chains, and the ability to build reliably.

This is also where “trillions” starts to sound less dramatic. If the base layers are energy and data centers, and the top layers are models and apps, the bill naturally spreads across industries. Nvidia sells a big piece of the stack, but it’s still just one piece.

For a related read on how Nvidia fits into the broader AI infrastructure surge, this internal overview ties the ecosystem together well: How Nvidia is fueling AI infrastructure growth in 2025.

A simple view of the AI stack, from power to apps, created with AI.

Conclusion

Huang’s Davos message lands because it’s not mystical. It’s physical. His argument is that we’re early in the infrastructure cycle, which is why the biggest spending is still ahead, and why “trillions” is about far more than chips.

If you’re watching what happens next, keep an eye on data center expansion, grid and power projects, server supply chains, and whether more companies turn AI from a pilot into a daily utility. AI will reshape work too, but not in one clean sweep. The practical stance is calm and steady: treat AI like a tool, learn it well, and pay attention to the very real pipes and wires that make it run.