Two top AI labs shipped major coding models on the same day in February 2026. That timing alone makes it feel like an AI war: not the meme kind, but the product-and-benchmarks kind, where devs wake up and their daily tools behave differently.

Before we compare them, quick plain-English definitions. A coding model is the part that writes and edits code. An agent is the part that keeps going: it plans steps, uses tools, runs commands, checks outputs, and retries until the job is done (or it gets stuck). That “keep going” loop is what both OpenAI and Anthropic are chasing hard.
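If the “keep going” loop sounds abstract, here’s a minimal Python sketch of its shape: pick an action, run a tool, check the result, retry. It assumes a hypothetical `propose_command` function standing in for the model and uses plain shell commands as the only tool. It’s an illustration of the idea, not how OpenAI or Anthropic actually build their agents.

```python
import subprocess
import sys
from typing import Callable, List, Optional, Tuple

def run_tool(cmd: List[str]) -> Tuple[int, str]:
    """Run one command and capture its exit code plus combined output."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode, proc.stdout + proc.stderr

def agent_loop(
    propose_command: Callable[[Optional[str]], List[str]],
    max_steps: int = 10,
) -> Tuple[bool, int]:
    """Plan a command, run it, check the result, retry until success or budget runs out.

    `propose_command` is a hypothetical stand-in for the model: it receives the
    previous command's output (None on the first step) and returns the next command.
    """
    last_output: Optional[str] = None
    for step in range(1, max_steps + 1):
        cmd = propose_command(last_output)   # plan: pick the next action
        code, last_output = run_tool(cmd)    # act: run the tool
        if code == 0:                        # check: did it work?
            return True, step
    return False, max_steps                  # stuck: step budget exhausted

# Toy "model" that fails once, then succeeds on the retry.
attempts = iter([
    [sys.executable, "-c", "import sys; sys.exit(1)"],
    [sys.executable, "-c", "print('tests passed')"],
])
ok, steps = agent_loop(lambda prev: next(attempts))
print(ok, steps)  # True 2
```

The step budget (`max_steps`) is the boring but important part: it’s what turns “gets stuck” into a clean failure instead of an endless run.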

This post breaks down what GPT-5.3-Codex and Claude Opus 4.6 are actually better at, what the benchmarks really say (without worshipping charts), and what changes for real teams shipping software.

Split-screen view of the two competing approaches, terminal-heavy execution vs long-context collaboration (created with AI).

Why GPT-5.3 Codex is a big deal for real agent coding

OpenAI’s message with GPT-5.3-Codex is pretty direct: this is the model for people who live inside editors and terminals and want faster “agent loops.” OpenAI claims it runs about 25 percent faster for Codex-style workflows, which sounds like a small product note until you’ve watched an agent do 30 to 80 steps. Speed changes the feel of the whole thing: fewer pauses, more iteration, more “try again” without you losing momentum.

A big part of that comes from how Codex is packaged now. The newer Codex app experience is built around longer tasks and even multiple agents, not just one chat window. It also pushes frequent progress updates, so you can see what it’s doing, then nudge it mid-run. OpenAI even talks about steerability settings (think “follow-up behavior”), which is basically permission for the agent to keep checking in instead of disappearing into a long silent run.

There’s also a quieter flex in the background: OpenAI said early versions of GPT-5.3 helped with internal engineering work tied to training and shipping the model itself, like debugging parts of the training run, diagnosing evals, and supporting operational tasks such as scaling GPU clusters as load changes. That matters because it suggests the model isn’t only good at neat demos; it can handle messy internal workflows too.

If you want OpenAI’s own framing and eval highlights, see the official release page, Introducing GPT-5.3-Codex. For a broader view of where agent-style tools are headed this year, I laid out the bigger shift in The AI of 2026 will be different.

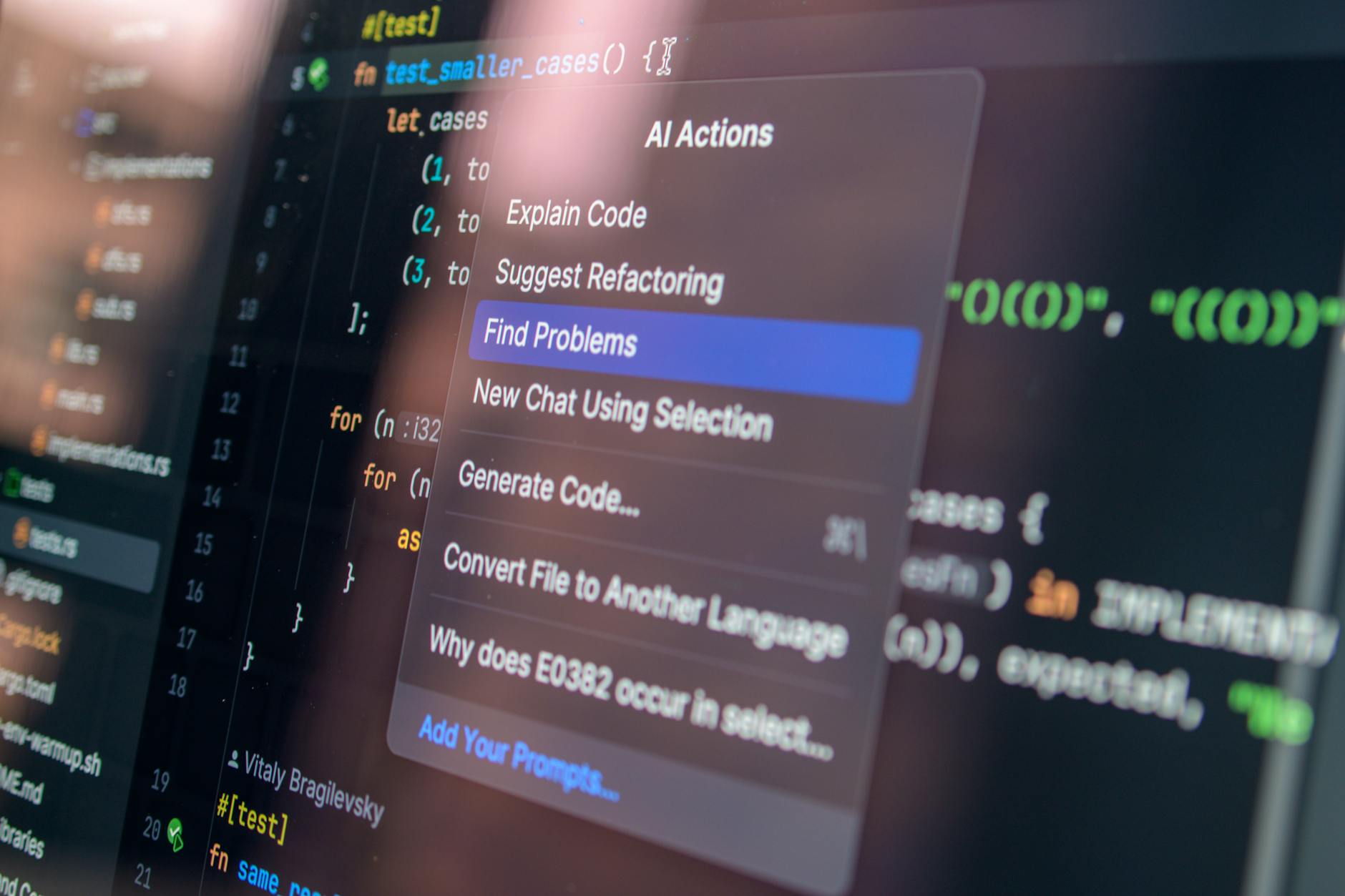

Photo by Daniil Komov

The benchmarks that matter if your AI lives in the terminal

Benchmarks can be boring, but the right ones map to real pain. GPT-5.3-Codex shows a small lift on “real software engineering” tasks, then a much bigger jump on tests that look like daily agent work: terminal control and desktop actions.

Here are the headline numbers OpenAI shared, translated into normal expectations:

| Benchmark (what it tests) | GPT-5.3-Codex | GPT-5.2-Codex | What it implies in practice |
|---|---|---|---|
| SWE-Bench Pro (real multi-language software engineering) | 56.8% | 56.4% | Small change (0.4 points), but these are hard tasks, so even small gains can mean fewer broken patches |
| TerminalBench 2.0 (CLI skills and command chaining) | 77.3% | 64.0% | Big jump (13.3 points): better at running tools, reading outputs, and staying “in flow” in a terminal |
| OSWorld Verified (desktop computer use with vision) | 64.7% | 38.2% | Much better at completing UI tasks; not perfect, but it stops failing so early |

TerminalBench is the one that makes developers sit up. It’s not “write a sorting function”; it’s more like: change directories, run tests, parse logs, adjust commands, repeat. A jump from 64 percent to just over 77 percent is the difference between “nice assistant” and “okay, it can actually drive.”

OSWorld is also worth reading carefully. OpenAI includes a human reference point around 72 percent on OSWorld Verified. GPT-5.3-Codex at 64.7 percent is not “human level,” but it’s close enough that you start to trust it with simple desktop tasks, especially in a sandbox.

If you want a third-party summary that’s less marketing-y but still readable, DataCamp posted a breakdown, GPT-5.3 Codex: from coding assistant to work agent.

Security is now part of the product story, not an afterthought

Here’s the part that changes how companies will deploy this. OpenAI reported a big improvement on cybersecurity capture-the-flag style evaluations: 77.6 percent for GPT-5.3-Codex, up from roughly 67 percent for GPT-5.2 variants they compared against. Those tests are not “hack the internet,” but they do measure whether the model can reason through exploit-like puzzles and security workflows.

Because of that, GPT-5.3-Codex is the first model OpenAI has labeled “high capability” for cyber tasks under its preparedness framework. Translation: stronger model, tighter controls. OpenAI also announced a trusted access cyber pilot program, a gated path for vetted security pros.

That combination is the real story. The better these coding agents get, the more they overlap with offensive security skills. So the rollout starts looking like staged access, monitoring, and stricter guardrails, not just “ship it to everyone and hope.”

Anthropic’s Opus 4.6 counters with long context, better memory, and agent teams

Anthropic’s answer with Claude Opus 4.6 feels like a different philosophy. Where OpenAI is shouting “terminal-ready speed,” Anthropic is saying, “fine, but can your agent hold the whole project in its head?”

Opus 4.6 ships with a 1-million-token context window (beta). In everyday terms, that can mean a huge chunk of a codebase plus design docs plus long issue threads, all at once. The point isn’t bragging rights; it’s fewer re-explanations. Anyone who’s tried doing real work with AI knows the annoying loop: you explain, it helps, then it forgets a key constraint from 40 messages ago.

Anthropic also talks openly about “context rot,” the way models can struggle to retrieve details buried deep in long inputs. They point to a big retrieval jump on MRCR v2: 76 percent for Opus 4.6 versus 18.5 percent for the earlier Sonnet 4.5 model. That’s not a small tune-up; that’s the difference between “it skimmed” and “it found the one line that matters.”
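To see what “context rot” means in practice, here’s a simplified retrieval check in Python. Fair warning: MRCR v2 is a harder multi-round test; this swaps in a basic “needle in a haystack” setup instead. It buries one constraint at different depths in filler text and scores how often a model gets it back. `ask_model` is a hypothetical stand-in for a real model call, and the two toy readers exist only to show the scoring.

```python
import random
from typing import Callable, List

def build_haystack(needle: str, filler: List[str], depth: float, n_lines: int, seed: int = 0) -> str:
    """Bury `needle` at a relative depth (0.0 = start, 1.0 = end) inside filler lines."""
    rng = random.Random(seed)
    lines = [rng.choice(filler) for _ in range(n_lines)]
    lines.insert(int(depth * n_lines), needle)
    return "\n".join(lines)

def retrieval_score(ask_model: Callable[[str, str], str], depths: List[float], n_lines: int = 1000) -> float:
    """Fraction of burial depths at which the model's answer contains the buried fact."""
    needle = "Constraint: we can't change the DB schema this sprint."
    filler = ["Routine log line.", "Unrelated design note.", "Old issue comment."]
    question = "What constraint applies to the DB schema this sprint?"
    hits = 0
    for depth in depths:
        answer = ask_model(build_haystack(needle, filler, depth, n_lines), question)
        hits += "can't change the DB schema" in answer
    return hits / len(depths)

# Two fake "models" for illustration: one reads everything, one only skims the top.
def full_reader(context: str, question: str) -> str:
    return next((line for line in context.splitlines() if line.startswith("Constraint:")), "")

def skimmer(context: str, question: str) -> str:
    return full_reader("\n".join(context.splitlines()[:100]), question)

depths = [0.0, 0.25, 0.5, 0.75, 1.0]
print(retrieval_score(full_reader, depths))  # 1.0
print(retrieval_score(skimmer, depths))      # 0.2
```

A model with context rot behaves like `skimmer`: fine when the detail sits near the top, lost when it’s buried.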

Two more practical details: Opus 4.6 supports up to 128,000 output tokens in a single response (so it can draft large chunks of code or docs), and it includes adaptive thinking with effort levels, meaning you can trade speed and cost for deeper reasoning when you need it.

Anthropic’s official post is here, Introducing Claude Opus 4.6. And if you want the broader “why agents are not just chatbots” framing, it pairs well with A new kind of AI is emerging.

Long-context work is really “retrieve the right detail at the right time,” not just stuffing more text in (created with AI).

Where Opus 4.6 shows up first, and why that rollout matters

Distribution is half the battle now. Anthropic pushed Opus 4.6 into developer surfaces people already use, especially GitHub Copilot. That means it can show up inside VS Code across chat, ask, edit, and agent modes, plus GitHub.com, mobile, CLI, and the Copilot coding agent, with a gradual rollout and enterprise policies that admins must enable.

That admin toggle piece matters. Enterprises don’t want surprise model swaps inside the IDE. They want governance, cost controls, and sometimes audit trails. So even if a model is great, it still needs a clean “yes, we approved this” path.

GitHub’s own update is here, Claude Opus 4.6 in GitHub Copilot. Microsoft also highlighted availability through its stack, Claude Opus 4.6 on Microsoft Foundry.

Multi-agent teamwork is getting real

Anthropic also introduced agent teams in Claude Code as a research preview. The simplest way to picture it is like splitting a sprint across a tiny AI team: one agent focuses on the front-end, another writes API routes, another prepares database migrations, and they coordinate.

Parallelism is the win. You stop waiting for one agent to finish every subtask in a line. But there’s a catch, and it’s a real one: coordination costs. Agents can reinforce each other’s mistakes, or create incompatible assumptions if you don’t set shared rules, tests, and a review step. When it works, it feels like extra hands. When it doesn’t, it feels like a group project where nobody read the same spec.
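Here’s a minimal sketch of why the shared rules and review step matter. Three hypothetical agents run in parallel via Python’s `ThreadPoolExecutor`; each one passes its own tests, but one quietly assumed a different user ID type. The agent functions, `SHARED_SPEC`, and the single `user_id_type` field are all made up for illustration; a real setup would call models and check far more than one field.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List

# Hypothetical shared rule every agent is told up front.
SHARED_SPEC = {"user_id_type": "uuid"}

@dataclass
class AgentResult:
    agent: str
    user_id_type: str   # the assumption this agent actually built on
    tests_passed: bool

# Stand-ins for three parallel agents; a real setup would call a model here.
def frontend_agent() -> AgentResult:
    return AgentResult("frontend", "uuid", True)

def api_agent() -> AgentResult:
    return AgentResult("api", "uuid", True)

def migration_agent() -> AgentResult:
    return AgentResult("migrations", "int", True)  # drifted from the spec

def review(results: List[AgentResult]) -> List[str]:
    """Review step: flag failing tests and assumptions that contradict the shared spec."""
    problems = []
    expected = SHARED_SPEC["user_id_type"]
    for r in results:
        if not r.tests_passed:
            problems.append(f"{r.agent}: tests failing")
        if r.user_id_type != expected:
            problems.append(f"{r.agent}: user_id_type is {r.user_id_type}, spec says {expected}")
    return problems

# Run the agents in parallel, then gate the merge on the shared review.
with ThreadPoolExecutor() as pool:
    futures = [pool.submit(f) for f in (frontend_agent, api_agent, migration_agent)]
    results = [f.result() for f in futures]

print(review(results))  # ['migrations: user_id_type is int, spec says uuid']
```

Every agent reports green tests. Only the shared review catches the mismatch, which is exactly the “nobody read the same spec” failure.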

So who wins, and what should you do next if you build software

There isn’t one winner, because these models are being shaped for different failure modes.

If your day is terminal-heavy, with lots of “run, inspect, patch, rerun,” GPT-5.3-Codex looks scary good. TerminalBench and OSWorld gains point to an agent that can keep moving through tool chains, not freeze after the first error. OpenAI also says GPT-5.3-Codex was trained and served on NVIDIA GB200 NVL72 systems, which is a reminder that hardware and model design are getting tied at the hip.

If your pain is long projects, huge repos, and repeated context hand-holding, Opus 4.6 is built for that. The 1M context plus improved retrieval means fewer “wait, I already told you this” moments.

My practical advice, and yeah it’s boring on purpose: run both on the same repo task and judge them on acceptance tests, not vibes. Pick one medium-sized change, require tests to pass, and watch the full tool log if your platform shows it. Start with low-risk work (docs, refactors, internal scripts) before you let any agent touch production paths.
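To make “judge them on acceptance tests” concrete, a tiny harness is enough. This Python sketch assumes each agent’s result lives in its own checkout (the `workdir` field, hypothetical) and that a single test command decides acceptance; it records pass/fail and wall-clock time, and treats a timeout as a failure. The demo uses stand-in commands instead of a real repo.

```python
import subprocess
import sys
import time
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    name: str
    test_cmd: List[str]            # e.g. ["pytest", "-q"]
    workdir: Optional[str] = None  # e.g. a checkout of that agent's branch (hypothetical)

@dataclass
class Verdict:
    name: str
    passed: bool
    seconds: float

def judge(candidates: List[Candidate], timeout: float = 600.0) -> List[Verdict]:
    """Run the same acceptance tests against each agent's result; exit code 0 = accepted."""
    verdicts = []
    for c in candidates:
        start = time.monotonic()
        try:
            proc = subprocess.run(c.test_cmd, cwd=c.workdir, capture_output=True, timeout=timeout)
            passed = proc.returncode == 0
        except subprocess.TimeoutExpired:
            passed = False  # a hung test run counts as a failure
        verdicts.append(Verdict(c.name, passed, time.monotonic() - start))
    return verdicts

# Demo with stand-in test commands instead of real repo checkouts.
demo = [
    Candidate("agent_a", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    Candidate("agent_b", [sys.executable, "-c", "assert 1 + 1 == 3"]),
]
for v in judge(demo):
    print(f"{v.name}: {'PASS' if v.passed else 'FAIL'} in {v.seconds:.2f}s")  # agent_a passes, agent_b fails
```

In a real comparison you’d point `test_cmd` at your project’s test runner and `workdir` at each agent’s branch, then read the tool logs for the runs that passed.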

On enterprise signals, the market is moving fast. OpenAI still has the widest production footprint (reported around 77 percent of enterprises using its products in production), while Anthropic’s share of enterprise production deployments has climbed sharply (reported around 44 percent by January 2026). Spending is rising too, with average enterprise LLM spend around $7 million in 2025, projected to about $11.6 million in 2026. That money doesn’t show up unless companies think these tools are becoming standard.
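Those two spending figures are easier to feel as a growth rate. A quick calculation on the reported averages (so no more precise than the reports themselves) puts it at roughly 66 percent year over year:

```python
# Reported average enterprise LLM spend, in millions of US dollars.
spend_2025 = 7.0
spend_2026_projected = 11.6

growth = (spend_2026_projected - spend_2025) / spend_2025
print(f"Projected year-over-year growth: {growth:.0%}")  # Projected year-over-year growth: 66%
```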

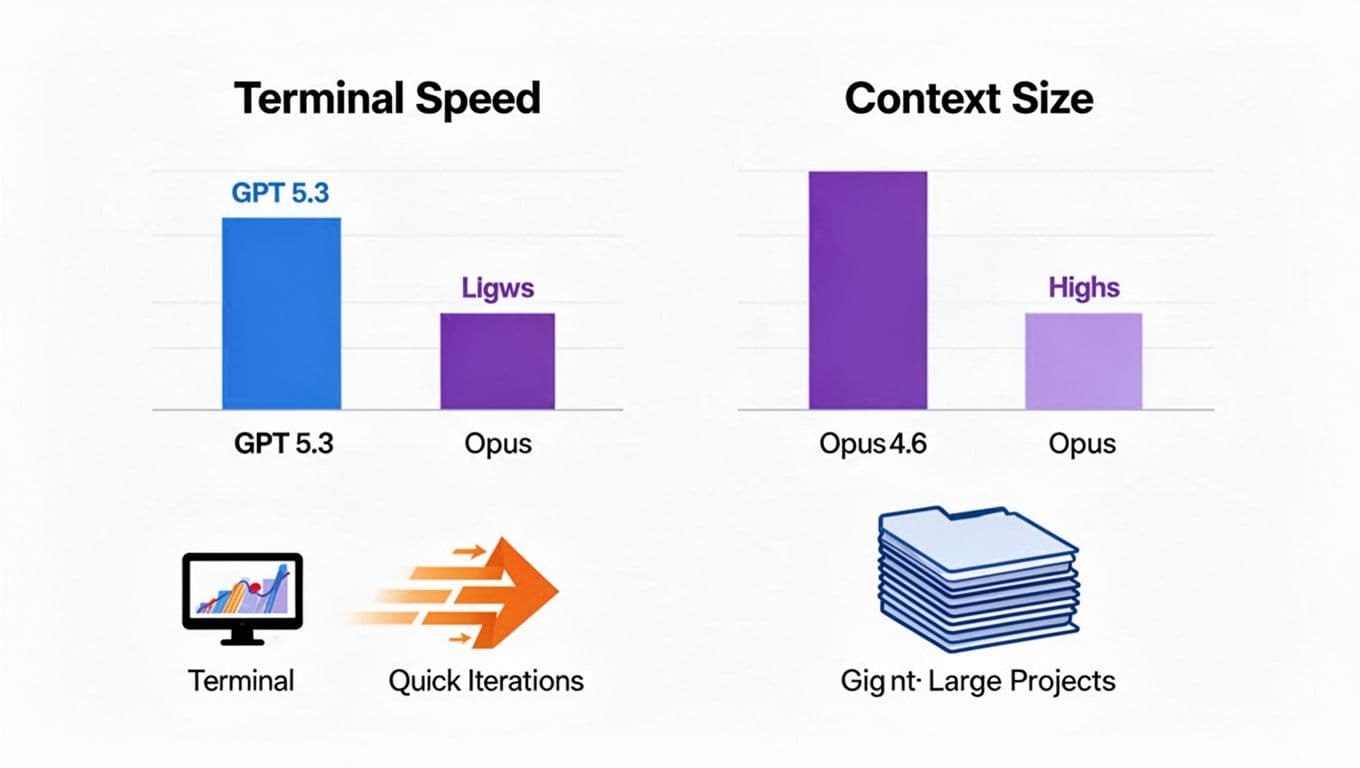

A simple way to choose: terminal execution speed vs massive context and retrieval (created with AI).

What this means for jobs, teams, and the next 6 months of AI coding

The question everyone whispers is about jobs. Do teams shrink, or does work just shift?

Near term, it’s mostly a shift. More output per developer, less time on boilerplate, more time on reviewing, testing, security checks, and product decisions. Agents don’t remove the need for responsibility, they raise it. If a model can make 30 changes fast, you need stronger guardrails fast.

You can see the fear in the market too. There was a reported sell-off across some software and services stocks tied to “AI replaces software” anxiety. And there’s also pushback from industry leaders saying mission-critical systems aren’t replaced overnight. That matches reality. Big systems have contracts, compliance, integration debt, and “if it breaks, we lose customers” risk. Agents will enter those systems through the side doors first: migrations, test generation, triage, internal tools.

Next six months? Expect tighter IDE integrations, more agent autonomy in safe sandboxes, and more gated access for models that cross into high-risk domains like cyber.

What I learned while testing these new coding agents (the honest version)

By Vinod Pandey.

In my trials, the biggest change wasn’t “wow, it writes code.” I’m kind of numb to that now. The change was watching an agent behave like it actually belongs in a workflow, not a chat.

One night I had a small but annoying bug in a throwaway Node project. The usual stuff, tests failing, one dependency mismatch, messy logs. The faster, terminal-focused agent style (the GPT-5.3-Codex vibe) helped because it didn’t stop after one suggestion. It kept proposing the next command, reading the output, then adjusting. It felt like pairing with a teammate who doesn’t get tired, and doesn’t get bored after the third rerun. I still had to steer it a bit, like “don’t update major versions,” but the loop was quick.

On the other side, the long-context approach (the Opus 4.6 vibe) saved me from repeating myself. I dropped in a long README, a design note, and a few error traces, and the responses stayed consistent with earlier constraints. That “memory” thing is hard to appreciate until you’ve wasted 20 minutes re-explaining a simple rule like “we can’t change the DB schema this sprint.”

And then the humbling part: one agent made a confident mistake. It suggested a fix that looked clean, passed type-checking, and still broke a real edge case. If I hadn’t run tests, I’d have shipped it. That’s the habit I’m keeping: small tasks first, clear repo instructions, a written definition of done, and never skipping the test run even when the agent sounds sure. Confident errors are still errors, just faster.

Conclusion

This AI war isn’t about who has the prettier demo. It’s a race to become your daily dev teammate, the one you trust with real work. GPT-5.3-Codex looks strongest when terminal skill, tool use, and fast iteration matter; Claude Opus 4.6 shines when long context, retrieval, and coordinated agent work matter.

What workflow do you want automated next, and what would make you trust an agent more: stricter tests, full action logs, approvals, or sandboxing?
