Picture a room where the music never loops. Not “a long playlist,” not “8 hours of ambient,” but sound that keeps changing in real time, second by second, and doesn’t come back to the same place. You could stay for an hour, a day, a week, and still not hear the same moment twice. It feels a bit like standing under a night sky where the stars rearrange themselves as you watch.

An audio waveform visualized with subtle quantum-style particle effects, created with AI.

That’s the promise people are circling right now with Quantum AI, especially as we hit February 2026 and the conversation shifts from “cool lab demo” to “real use.” In simple terms, Quantum AI is a blend of AI techniques with quantum computing methods, where some of the variation can come from quantum behavior (probability and measurement) instead of only from patterns learned in old datasets.

And that last part is the tension point. Regular AI music tools usually learn from huge libraries of human music. Quantum-flavored systems can be built to generate from rules plus quantum randomness, which changes what “original” even means and (maybe) changes the copyright headache too.

What makes Quantum AI music different from the AI songs you have heard so far

Most AI music you’ve heard in the last couple of years follows a familiar recipe. Feed the model tons of songs, let it learn what tends to come next, then ask it to make a new song “in the vibe of” something. Even when the output is fresh, it’s still built on learned patterns from existing catalogs.

Quantum AI music can be approached differently. Instead of leaning on a giant style library, it can use quantum randomness as a core ingredient for generating new sequences and textures, then shape those outcomes with constraints you choose (tempo, density, instrument palette, energy level). That doesn’t mean “quantum equals better.” It means the system is exploring a different kind of space.

A quick everyday comparison helps:

Traditional AI music is like a chef who learned by tasting 50,000 dishes, then improvises something similar.

Quantum-driven generation is closer to rolling a lot of strange dice at once, then cooking with the result, while still following your diet rules.

If you want context on how quantum systems are already being used in music experiments and composition, IBM has a solid explainer in “Quantum compositions and the future of AI in music.”

Pattern-learning AI vs. quantum randomness, the clean mental model

Pattern-learning AI is a prediction machine. Given what it’s seen before, it guesses what usually comes next. That’s why it’s so good at “sounds like” outputs.

Quantum randomness changes that feel. The outcome of a quantum measurement is genuinely unpredictable, not just pseudo-random output from a deterministic algorithm. A quantum system can hold many possibilities in superposition, and measuring it “collapses” them into one result. That result becomes part of the musical path.

You still need structure, unless you want noise. So the practical model is: rules plus quantum variation. The rules keep the music coherent; the quantum variation keeps it from tracing the same grooves again and again.
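To make “rules plus quantum variation” concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the pentatonic scale, the two-step limit, and the function names are hypothetical, and `secrets` (operating-system entropy) only stands in for a quantum source. A real system would replace `random_step` with a hardware quantum random number generator or a measured quantum circuit.

```python
import secrets

# Rule 1 (illustrative): only notes from C major pentatonic, as MIDI numbers.
SCALE = [60, 62, 64, 67, 69]
# Rule 2 (illustrative): move at most two scale degrees between notes.
MAX_STEP = 2


def random_step(max_step: int) -> int:
    """Stand-in for a quantum measurement.

    Returns a uniform integer in [-max_step, max_step]. This uses OS
    entropy, which is NOT quantum; swap in a real quantum source here.
    """
    return secrets.randbelow(2 * max_step + 1) - max_step


def next_degree(current: int, step: int) -> int:
    """Apply the step, then clamp so the melody stays inside the scale."""
    return max(0, min(len(SCALE) - 1, current + step))


def generate_phrase(length: int, start: int = 2) -> list[int]:
    """Walk through the scale: randomness picks the move, rules bound it."""
    degree = start
    notes = []
    for _ in range(length):
        degree = next_degree(degree, random_step(MAX_STEP))
        notes.append(SCALE[degree])
    return notes


if __name__ == "__main__":
    print(generate_phrase(16))
```

The randomness decides each move, but the rules decide which moves are possible, so the phrase stays in key and avoids large leaps no matter what the source returns.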

Why “never repeats” is a big deal for background sound

“Never repeats” sounds like marketing until you sit with it.

Most background music today is loop-based. Even long playlists turn into repetition because your brain is annoyingly good at noticing patterns. In retail, waiting rooms, and lobbies, that loop fatigue is real. In videos and podcasts, repetition turns into listener drop-off. In meditation apps, it can become a mild irritation you can’t un-hear.

Continuous generation changes the unit of music from “track” to “stream.” Instead of exporting a 3-minute file, the system can produce an evolving soundscape that keeps moving. That’s where Quantum AI starts to feel less like a novelty and more like a tool that fits actual places people spend time.
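One way to picture the shift from “track” to “stream” is a Python generator that has no end and is consumed a little at a time. This is only a sketch under assumptions: `NoteEvent`, `sound_stream`, and the pitch and duration sets are hypothetical names, and `secrets.choice` again stands in for a quantum source.

```python
import itertools
import secrets
from typing import Iterator, NamedTuple


class NoteEvent(NamedTuple):
    pitch: int             # MIDI note number
    duration_beats: float  # how long the note lasts


# Illustrative constraints; a real tool would expose these as settings.
PITCHES = [57, 60, 62, 64, 67]
DURATIONS = [0.5, 1.0, 2.0]


def sound_stream() -> Iterator[NoteEvent]:
    """Yield note events forever; there is no last bar and no loop point."""
    while True:
        # Stand-in randomness (OS entropy, NOT quantum).
        yield NoteEvent(secrets.choice(PITCHES), secrets.choice(DURATIONS))


if __name__ == "__main__":
    # A player pulls events as it needs them instead of loading a file.
    for event in itertools.islice(sound_stream(), 8):
        print(event)
```

With a palette this small, short fragments will recur by chance. What the stream avoids is a fixed loop point, which is the thing listeners learn to anticipate.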

The rules it breaks, and why listeners notice right away

The first time you hear truly non-repeating music, your brain goes looking for the handrails. A chorus. A familiar return. A predictable resolution.

Then it doesn’t happen.

That’s the “breaks every rule we know” moment, not because the music is louder or faster, but because it refuses to behave like a normal song. Standard pop structures rely on repetition for memory. Even a lot of ambient music repeats in softer ways, like the same pad cycling every 8 bars. Quantum AI music can be designed to keep evolving, so the comfort comes from mood, not from a hook.

A music timeline that keeps extending without loops, created with AI.

If you want a concrete example of quantum plus AI entering public music releases, The Next Web covered Moth and ILĀ’s track “Recurse” as a quantum-powered generative AI song. Whether you love it or not, it’s a sign the idea is leaving the whiteboard.

No verse-chorus safety net, just constant evolution

Most songs are built like houses with repeating rooms. You recognize where you are. Verse, pre-chorus, chorus, bridge, chorus. Even when it’s creative, it’s stable.

Quantum AI music can be built more like weather. A front moves in, light rain, then a clearing, then wind, then calm again. The music can still have arcs, but it’s not trying to make you sing along.

That’s why the early sweet spot isn’t likely to be chart pop. It’s functional music: background audio, game atmospheres, film tension beds, spa soundscapes, study music. Places where the job isn’t “memorize this,” it’s “support this moment.”

Micro-surprises, new textures, and sounds that feel “unheard of”

Here’s what people mean when they say it sounds alien. It’s often not the notes, it’s the texture.

The sound can keep shifting color. A clean synth becomes grainy. A soft pulse becomes a cluster. A bright bell tone gets smeared into something like glass underwater. Those micro-surprises can show up every few seconds, but still stay inside the mood you asked for.

And it’s not chaos unless you design it that way. Constraints still matter. You can keep it calm, slow, minimal, and let the variation happen in tiny details. That’s the trick: freshness without stress.

The copyright shockwave, why Quantum AI could sidestep the usual lawsuits

AI music in 2024 and 2025 picked a fight with copyright, whether companies wanted it or not. When a model trains on huge catalogs of existing songs, the debate isn’t just philosophical. It becomes legal, financial, and messy. Artists argue their work was used without consent. Companies argue fair use or licensing. Users get caught in the middle when a platform flags a track.

Quantum AI shifts that debate because it can reduce dependence on training libraries. If a system’s output is generated from original rules plus quantum randomness, and not learned by ingesting copyrighted catalogs, the argument for “this is a derivative remix machine” gets weaker. Some people even describe it as mathematically provable originality, in the sense that the system is not copying a known sequence but sampling from a source of physical randomness. Still, the law doesn’t run on vibes, so it’s smart to treat this as risk reduction, not a magic shield.

For a broader sense of how crowded the AI music market is getting right now, Billboard’s snapshot of AI music companies shaping 2026 is a useful reality check. Most of the market is still non-quantum, and the legal pressure is still very real.

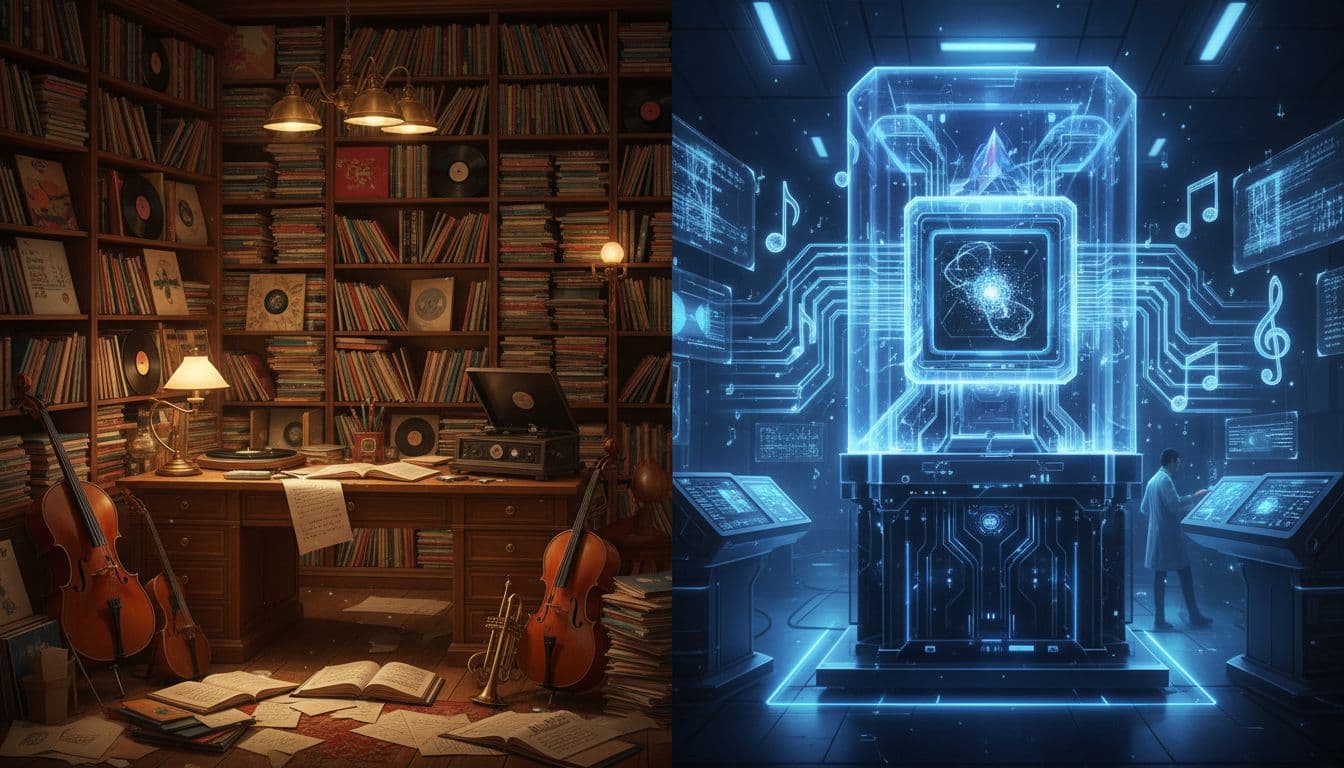

Two approaches side by side, learning from old catalogs versus generating from new randomness, created with AI.

From “it sounds like that artist” to “provably new patterns”

Creators don’t want a courtroom debate. They want a simple outcome: fewer takedowns, fewer claims, fewer sleepless nights before a campaign launch.

The practical difference is this: if your tool is trained to imitate styles from existing catalogs, you can accidentally land near a known song’s fingerprint. If your tool is built to generate from a different source, quantum randomness plus original constraints, you’re less likely to drift into “that sounds like X.” It changes the conversation from “who did it learn from?” to “what system made it, and what inputs shaped it?”

Again, not a legal promise. Just a different starting point.

What creators and brands can do to stay safe anyway

Even in a best-case world, you should keep receipts. A simple workflow helps:

- Pick tools with clear provenance: Look for documentation on how music is produced and what data sources are used.

- Keep a creation log: Save prompts, settings, version numbers, and timestamps.

- Follow platform policies: If you publish on YouTube, TikTok, or streaming platforms, check their music guidelines before you post.

- Get a legal review for big spends: Ads, film releases, major games, and brand campaigns deserve real counsel.

It’s boring paperwork, yes. It also saves your future self.
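The creation log from the list above can be as simple as an append-only file with one JSON record per render. Here is a minimal sketch, assuming a hypothetical `log_creation` helper and made-up field names; adapt the fields to whatever your tool actually exposes.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_creation(log_path, tool, version, prompt, settings):
    """Append one record (JSON Lines format) describing how a piece was made."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "version": version,
        "prompt": prompt,
        "settings": settings,
    }
    # Append mode keeps earlier records untouched, which is the point of a log.
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry


if __name__ == "__main__":
    # Tool name, version, and settings below are placeholders.
    log_creation(
        "creation_log.jsonl",
        tool="example-generator",
        version="0.0.0",
        prompt="calm, slow, minimal",
        settings={"tempo_bpm": 70, "density": 0.2},
    )
```

One line per render is easy to search later, and the UTC timestamp gives you a consistent ordering if a claim ever comes up.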

Where this hits first, and who gets the first-mover advantage

The loudest fear is “AI will replace musicians.” The quieter truth is more specific: the first wave tends to hit functional creative work. Music that exists to fill space, set tone, or support another product. That’s where continuous, non-repeating audio has a clear job to do.

This connects to a bigger pattern some of us have been watching since 2025. First it was “agents” handling tasks without much supervision. Now in 2026, AI is showing up in more embodied, real-time ways, not just text on a screen. Music that generates live, adapts live, and never repeats is part of that same shift. The speed is what gets people. Something that sounds wild in January can feel normal by next winter.

In my view, the advantage window is real but short. Early adopters get to build new workflows, new libraries, and new licensing models while everyone else argues on panels.

A calm studio scene where endless, non-repeating audio fits naturally, created with AI.

Content creators, endless platform-safe background music on tap

If you make YouTube videos, podcasts, shorts, reels, or livestreams, you’ve probably felt the music problem in your bones. You need background audio that fits your mood, doesn’t annoy repeat viewers, and won’t trigger claims.

Quantum AI-style continuous music could become “background music on tap,” tuned to your pace and vibe. Fast edit? Give it more motion. Slow tutorial? Keep it warm and steady. And because it can keep evolving, you don’t get that obvious 30-second loop that makes people click away.

Games, films, and live sets, soundtracks that react in real time

Games and interactive media are a perfect match, because they already run on state changes. Player enters a cave, health drops, enemy appears, the music should react. Many games do this with layered loops. Continuous generation can make it feel more alive.
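In practice, “the music should react” usually means turning game state into the constraints a generator works under. The sketch below is one hedged way to do that in Python. `MusicParams`, `params_for_state`, and all the weights are hypothetical choices for illustration, not taken from any engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicParams:
    tempo_bpm: int
    density: float  # 0.0 = sparse, 1.0 = busy


def params_for_state(health: float, enemies_nearby: int, in_cave: bool) -> MusicParams:
    """Map game state to generator constraints (weights are illustrative)."""
    health = max(0.0, min(1.0, health))  # treat health as a 0..1 fraction
    # Tension rises as health drops and as enemies appear, capped at 1.0.
    tension = min(1.0, (1.0 - health) * 0.6 + min(max(enemies_nearby, 0), 4) * 0.1)
    tempo = round(70 + 70 * tension)     # 70 bpm when calm, 140 at full tension
    density = 0.2 + 0.7 * tension
    if in_cave:
        density *= 0.5                   # caves get a thinner texture
    return MusicParams(tempo, round(density, 2))


if __name__ == "__main__":
    print(params_for_state(health=1.0, enemies_nearby=0, in_cave=False))
    print(params_for_state(health=0.3, enemies_nearby=2, in_cave=True))
```

The generator never sees the game directly. It only sees tempo and density change, which keeps the musical rules separate from the gameplay logic.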

Film can use it too, especially for long scenes where looping beds become noticeable. The music can track the emotional slope without repeating the same bar structure.

And DJs and producers? This is where things get fun. Hybrid sets where the artist steers, filters, and cues, while the system spins unexpected layers underneath. Not replacement, more like a second mind in the room that doesn’t get tired.

What I learned playing with this idea, excitement, weirdness, and a small reality check

I sat with an early “endless” generator concept recently and told myself I’d listen for five minutes.

It turned into ten, then fifteen. I was waiting for the tell. The moment where the loop comes back, or a phrase repeats too neatly. It didn’t. Not in any obvious way.

The weird part was my physical reaction. I felt impressed, then slightly unsettled, like my brain couldn’t file it. Music normally gives you little returns, little landmarks. Here it was more like walking in fog with a good compass. You know the direction, but you don’t see the same tree twice.

There was an imperfect moment too, a brief awkward dip where the texture thinned out and I thought, “Oh, it broke.” Then it swelled back in a new form, like it meant to do that. Maybe it did. Maybe it didn’t. That’s kind of the point.

Here’s my reality check: none of this replaces meaning. You still need taste. You still need context. You still need to know what the music is for. A meditation track needs trust and calm. A retail space needs energy that doesn’t irritate staff by hour four. A film scene needs emotional intent. The system can generate sound forever, but it can’t decide why the sound should exist. Not on its own.

And yeah, the pace is intense. In the 2025 to 2027 window, tools that feel unreal can become normal fast. If you work with audio in any form, it’s worth paying attention now, while it’s still weird.

Conclusion

Quantum AI changes music by changing the source of novelty. Instead of remixing patterns from huge song libraries, it can generate continuous, non-repeating sound shaped by constraints and quantum randomness. That shift can also reshape the copyright conversation, not by guaranteeing safety, but by reducing the “trained on your catalog” argument that fuels so many disputes.

The first impact won’t be replacing singer-songwriters. It’ll show up in functional music: creators, wellness, retail, games, film beds, and live hybrid sets. If your work depends on background audio, experiment early, document your process, and keep your human taste in charge.
