Apple just made a very un-Apple move. Instead of keeping the brains of its next big software shift fully in-house, it’s bringing Google’s Gemini models into the center of iPhone AI.

If you’re an iPhone user, the practical headline is simple: AI features, including a much smarter Siri, should improve faster in 2026. (In plain English, AI here means software that can understand and produce text and images and carry out actions, a bit like an assistant that can reason through requests.) Under the hood, what Apple is buying from Google is a “foundation model,” which is basically a big, general-purpose model that can be adapted into lots of smaller features, like rewriting text, summarizing notifications, or handling multi-step tasks.

This surprised people because Apple usually prefers tight control, and because it had been leaning on OpenAI for some Apple Intelligence pieces. Now the big question is the one everyone cares about: who wins, who loses, and what changes the moment you pick up your phone?

What Apple and Google actually agreed to, and why it matters

Apple and Google symbolically “meet in the middle” as the AI partnership becomes official (image created with AI).

Apple and Google announced a multi-year partnership in January 2026 that makes Google’s Gemini a core engine behind the next wave of Apple Intelligence, including the revamped Siri. Reporting across outlets describes it as a meaningful shift in Apple’s plan after a year of delays and public pressure, with Apple positioning Gemini as the “foundation” for key features (you can see a mainstream breakdown in this CNBC report on Apple choosing Gemini for Siri).

Here’s the part that matters: Apple isn’t just adding “a chatbot.” It’s tying Siri and other everyday features to a model stack that can handle language, images, and actions. That’s why this deal hits differently than, say, swapping a maps provider.

Privacy is the other big pillar. Apple’s public line is that user data still gets protected through a mix of on-device processing and Apple-controlled cloud processing (the same general idea as its Private Cloud Compute approach). In other words, the promise is that Gemini helps power the intelligence, but Apple keeps tight boundaries around what data goes out, and what partners can do with it.

Money is the uncomfortable part, because it’s the part nobody fully confirms. Multiple reports point to Apple paying Google around $1 billion a year for access to Gemini, but the exact terms have not been publicly disclosed. Think of that figure as “widely reported, not officially itemized on a stage.”

And timing is finally getting concrete. Coverage suggests the biggest Siri upgrades arrive with iOS 26.4, expected around March or April 2026. That matters because Apple has been selling the future of iPhone software for over a year now, while users waited.

What changes for Siri and Apple Intelligence in 2026

A more conversational Siri is the real-world face of the Gemini partnership (image created with AI).

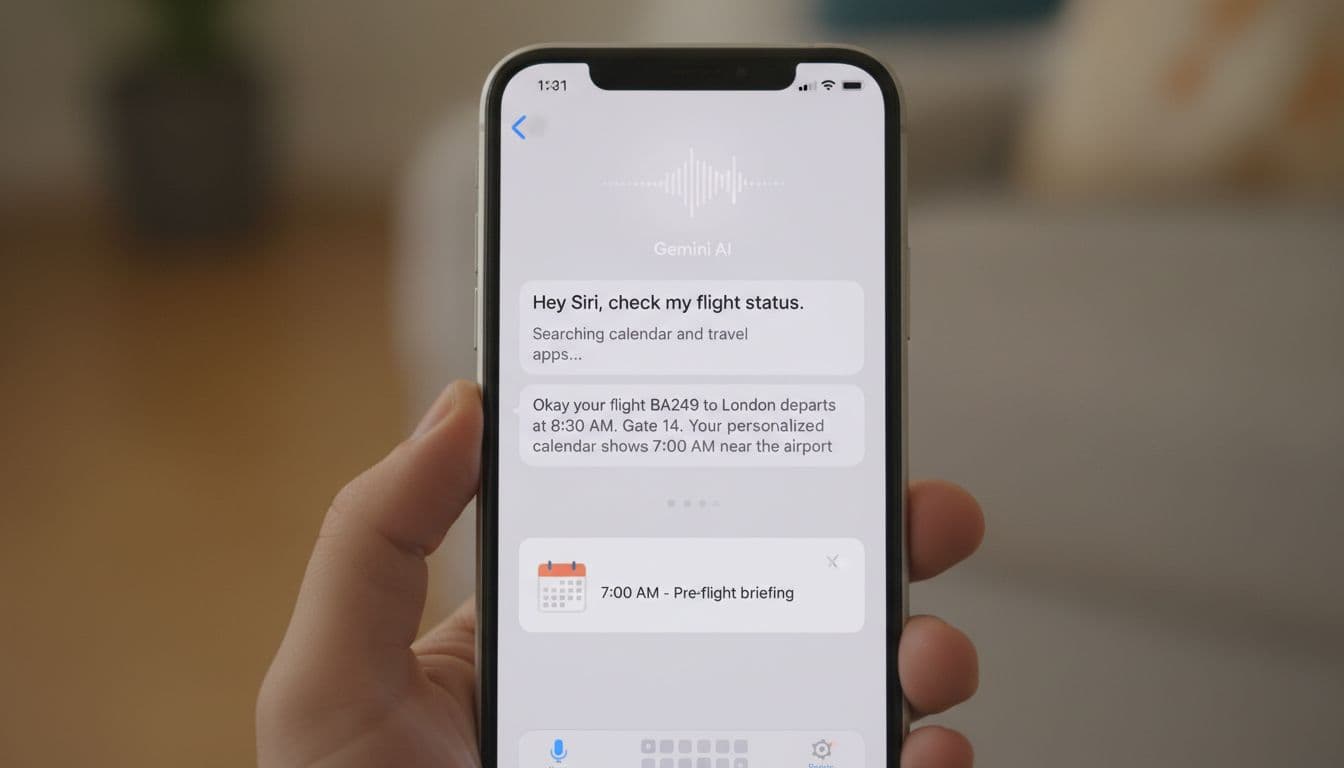

A Gemini-powered Siri should feel less like a voice command tool and more like a helper that can hold context.

In real life, that usually shows up in boring, valuable moments:

You ask, “What time does mom land?” and Siri can connect the dots across your emails, texts, and calendar, then follow up with “Do you want me to text her that you’ll be there?” without you repeating yourself.

You say, “Move lunch to next week and tell Alex,” and Siri doesn’t just open the calendar. It checks for conflicts, makes the change, and drafts the message with the right date.

This is the stuff Siri has struggled with. Apple had teased a more personal assistant experience, then pushed timelines, then pushed them again. That delay created real frustration, especially after marketing for recent iPhones set expectations that some features didn’t meet right away. Some customers even took legal action, which is rare territory for Apple, and not the kind it wants.

If Google’s models help Siri stay coherent across follow-ups, understand what’s on your screen, and complete tasks inside apps, it’s a noticeable upgrade. It’s also the kind of upgrade people don’t “benchmark.” They just feel it, on a rushed Tuesday morning.

For more context on how the deal changes Apple’s AI story, this overview from The Verge on what the Gemini deal means frames it as a platform decision, not a feature add-on.

Why Apple picked Google over OpenAI or Anthropic

Apple has a hard rule that quietly shapes everything: it doesn’t let partners train on Apple user data. So if you’re a model provider, you can’t treat iPhone usage like free fuel. That pushes the selection process away from “who has the best demo today?” and toward “who can meet Apple’s privacy, IP, and scale requirements without drama?”

One theory that fits the pieces is that Google’s control over its ecosystem made the guarantees easier to offer. Google runs its own cloud and has custom AI chips (TPUs) that can lower cost and speed up inference at scale. If Apple wants strict processing boundaries plus predictable economics, that kind of vertical control can look attractive.

There’s also a messaging win for Google: Apple reportedly described Gemini as the “most capable foundation” for Apple Foundation Models. That’s not just polite partner talk. That’s Apple, the fussy one, handing out a gold star.

Still, this isn’t described as an exclusive deal. Apple can keep using other models for certain Apple Intelligence features, and it can swap providers over time. It’s more like Apple is building a toolbox, and Gemini just got the biggest slot.

If you want a broader view of how fast “best model” shifts year to year, the piece on RiftRunner and the AI Whisper War captures the weird reality: leadership changes quickly, and quietly.

What Google wins, and what could backfire for Google

The upside for Google is a mix of revenue and reach, with privacy constraints in the middle (image created with AI).

For Google, this deal is part paycheck, part distribution jackpot, and part reputation repair.

Google has taken hits in public perception over the last couple of years of generative AI chaos. It also had some very public product stumbles early on, the kind you can’t fully erase with a blog post. So getting picked by Apple is a different sort of validation. Apple doesn’t hand out compliments easily.

But let’s not pretend this is charity. Google wants two things: money and behavior change.

A direct money win and a massive distribution win

Start with the obvious: recurring revenue. Reports have floated a figure around $1 billion per year paid by Apple, though neither side has laid out the contract in full public detail. That’s real money, even for Google, and it’s likely tied to a multi-year view.

Then there’s the bigger prize: distribution.

There are roughly 1.5 billion iPhone users worldwide. If Siri becomes the front door for everyday actions, and Gemini is behind the door, Google is suddenly present at the exact moment people search, decide, and buy.

That has a few angles:

Product discovery: Siri becomes a place where people ask “What should I buy for…” and an AI model is effectively shaping the shortlist.

Purchases and intent: If Siri helps you compare options and complete actions, the assistant can steer where intent turns into dollars.

The long game: Today it’s “Gemini inside Siri.” Tomorrow it could be deeper Gemini experiences, and in the most aggressive version, maybe even a pre-installed Gemini app. Nobody has confirmed that. But the “foot-in-the-door” logic is the kind of logic Big Tech runs on.

You can see how analysts are framing this as a major validation moment in coverage like Fortune’s take on Apple Intelligence powered by Gemini.

The risks, trust issues, and regulatory pressure Google still faces

The risk is simple: some people just don’t want Google anywhere near their assistant, period.

Even if Apple says processing is protected, even if data boundaries are strict, trust isn’t binary. It’s vibes, history, and headlines. And Google has a long history of being associated with ads and tracking, fair or not, so the burden of proof is higher.

Regulation is the other shadow. Google’s distribution deals have been watched for years, and tighter rules could change the economics of paying for default placement and default access. This Apple deal is not identical to the old “search default” fights, but it lives in the same neighborhood, and regulators love that neighborhood.

Still, for Google, it’s probably worth it. If you’re trying to protect your future in a world where assistants answer questions before users ever “search,” you don’t sit this one out.

What it means for OpenAI, the $500 billion upstart, and the next device war

OpenAI faces a distribution problem when rivals show up built-in (image created with AI).

If Google is the big winner, OpenAI is the obvious loser, at least in the near term.

This isn’t about model quality in a vacuum. It’s about where people actually use AI. Most people don’t wake up and think, “Time to evaluate language models.” They use what’s already there.

The phrase “$500 billion upstart” has floated in recent coverage, and it fits the vibe: OpenAI is huge, but it’s still the newcomer compared to Apple and Google. This detailed write-up from Fortune on what the deal means for Apple, Google, and OpenAI is one of the clearest summaries of why distribution is the real battlefield.

Losing the default spot hurts growth, even if ChatGPT is still huge

OpenAI isn’t small. ChatGPT is one of the most recognized consumer AI products on earth, and it reportedly serves hundreds of millions of users weekly.

But default placement is different. Built-in distribution changes habits. It changes what people recommend to friends. It changes what “normal” feels like.

If iPhone users start getting answers through Siri that feel sharp, personal, and fast, they might stop thinking “I’ll open ChatGPT for that.” Not because they dislike ChatGPT, but because they forget. And forgetting is deadly in consumer tech.

This also strengthens the story that Google “caught up,” and may even have passed OpenAI in some areas. Stories don’t need to be perfectly true to become sticky. They just need enough proof points.

If you’re tracking how OpenAI has been pushing into everyday usage beyond the app, this look at ChatGPT Atlas and conversational browsing shows the same strategy: win the surface where people spend time, not just the model leaderboard.

How this affects OpenAI’s plans for new AI hardware

OpenAI has talked about working on a new kind of AI device (with help from Apple’s former design chief Jony Ive). The pitch, broadly, is a “post-phone” interface, something that makes talking to AI feel natural without pulling out a slab of glass every time.

Apple’s shift to Google changes the chessboard here in a few ways.

First, it reduces OpenAI’s “inside view” into Siri’s next chapter. If you’re building a device meant to compete with the phone as an AI hub, you want to understand the phone’s assistant deeply.

Second, it raises the bar for what a new device has to do. If Siri becomes genuinely helpful, fewer people feel the itch to try a separate gadget. Convenience is a brutal competitor.

Third, it pushes OpenAI toward building an even tighter ecosystem, because distribution is now the pain point. In 2026, ecosystems aren’t just nice, they’re defensive walls.

If you want a forward-looking view on why 2026 is shaping up as an “agent” year, and why assistants will act more than they talk, this piece on what AI will look like in 2026 is a good framing.

What I learned watching this deal, and what it means for regular people

For users, the deal matters most in everyday moments, not press events (image created with AI).

I’ll be honest, my first reaction was kind of mixed. I like Apple’s privacy posture. I also like when Siri actually works. And I don’t love the idea of any one model becoming the default brain for billions of people.

Sitting with it for a day, a few lessons keep coming back.

Privacy is a product feature now. People don’t read whitepapers, but they do notice when a company speaks clearly about where their data goes. Apple’s stance that partners can’t train on iPhone user data isn’t just a policy detail. It’s a bargaining chip.

Distribution beats demos. The best model on a benchmark doesn’t matter as much as being one tap away. If Siri becomes the place where “asking AI” happens for iPhone users, that’s a habit shift you can’t easily buy back.

Apple partners when it needs time. Apple loves owning the stack, but it also hates missing a platform shift. This looks like Apple buying time to improve its own models, work on compression and efficiency, and still ship a better experience in 2026.

The “best model” label changes fast. A year is a long time in AI. Two years is forever. Today it’s Gemini as the foundation. Later it could be a different mix, or Apple’s own models taking more of the load.

If you’re a regular iPhone user, here’s what I’d actually watch for, not the hype.

Check your AI settings when the update lands. Look for toggles around on-device processing, cloud processing, and which features can access things like Mail, Messages, and Calendar. If Siri gets more personal, it will need permissions, and you should choose those on purpose.

And treat the assistant like a helpful intern: great at drafts and busywork, still needs oversight.

Conclusion

Apple’s AI deal with Google is a practical move with big ripple effects. Apple gets a faster path to a smarter Siri in 2026, plus breathing room to keep improving its own foundation models without taking another year of heat. Google gets new revenue and, more important, a deep channel into how roughly 1.5 billion iPhone users ask questions and make decisions. OpenAI, even as the upstart with massive reach, loses a prime distribution lane and now has to fight harder for mindshare on the most popular phone platform.

2026 is the proving year. Don’t judge this by press quotes. Judge it by whether Siri actually finishes the job, whether privacy choices are clear, and whether the assistant saves you real minutes each day.